When folks discuss AI today, we often hear the term “deep learning”. What is this? How does a machine learn “deeply”? The typical inscrutable prop talk sounds something like this:

Deep learning is a subset of machine learning that utilizes multi-layered artificial neural networks to model complex patterns and representations in large-scale data through hierarchical feature extraction and non-linear transformations.

Did that clear things up? Yeah, me neither. So, here’s a simple analogy for the rest of us, illustrating how a deep learning algorithm (DLA) might learn to identify cats by looking at photographs.

Kitty Clues: How DLAs Learn to Spot Cats

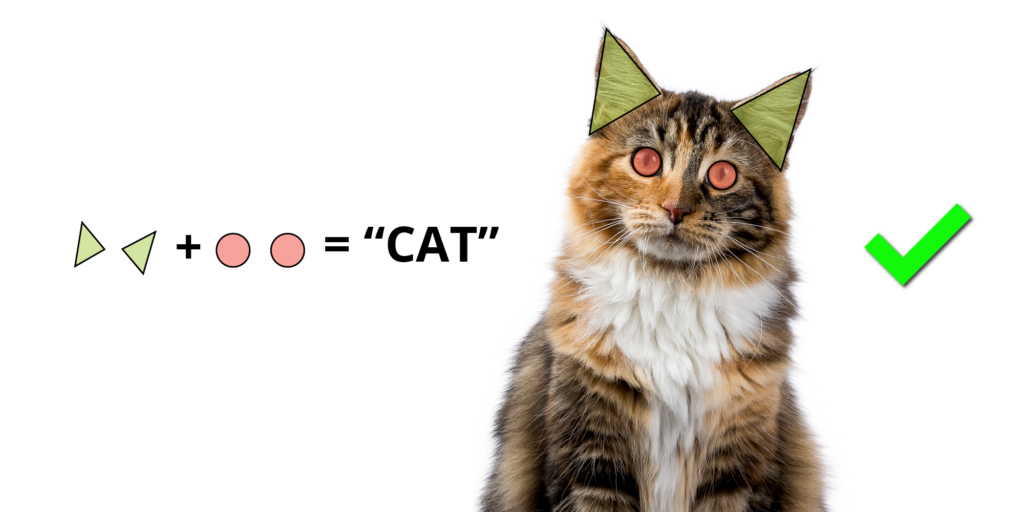

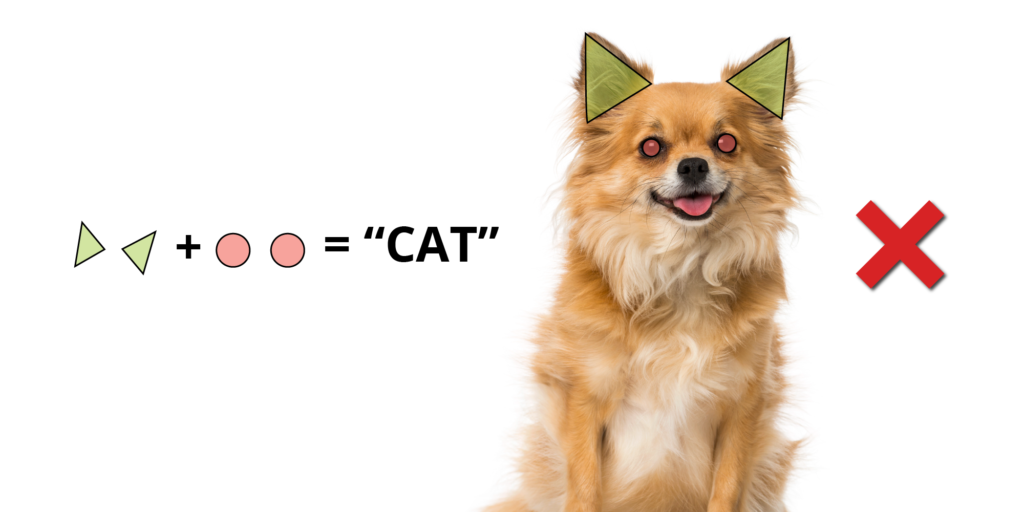

Let’s imagine a DLA examining a real photo of a cat and looking for patterns within those fluffy feline features. For instance, the DLA might note that cats generally have two triangular-shaped ears and two circular eyes. Initially, it might apply the overly general rule: “2 triangles + 2 circles = CAT” to its guessing strategy. It would also assign an importance or “weight” to these attributes. The higher the weight, the more influence the attribute has on the final prediction.

Let’s say, hypothetically, that after the DLA saw its first photo of a cat, it gave having two triangle-shaped ears a high importance (say, 10) and gave the same weight to having two circular eyes. Thus, we could illustrate the DLA’s “thinking” with this equation:

2 triangular ears (weight 10) +

2 circular eyes (weight 10) = “CAT”
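The equation above can be sketched in a few lines of Python. This is only an illustration of the analogy, not how a real neural network is built: the feature names, the `cat_score` and `is_cat` helpers, and the cutoff of 20 (meaning both features must be present) are assumptions made for the example.

```python
# Weights from the example above; the feature names are made up for illustration.
WEIGHTS = {"two_triangular_ears": 10, "two_circular_eyes": 10}
THRESHOLD = 20  # assumed cutoff: every listed feature must be present


def cat_score(features: dict, weights: dict = WEIGHTS) -> int:
    """Add up the weights of the features that are present in a photo."""
    return sum(weight for name, weight in weights.items() if features.get(name, False))


def is_cat(features: dict, weights: dict = WEIGHTS, threshold: int = THRESHOLD) -> bool:
    """Guess "CAT" when the weighted score reaches the cutoff."""
    return cat_score(features, weights) >= threshold


cat_photo = {"two_triangular_ears": True, "two_circular_eyes": True}
one_eared_blob = {"two_triangular_ears": True, "two_circular_eyes": False}

print(cat_score(cat_photo), is_cat(cat_photo))
print(cat_score(one_eared_blob), is_cat(one_eared_blob))
```

Notice that the rule only checks for triangles and circles, so any photo with both features gets the same score as the cat.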

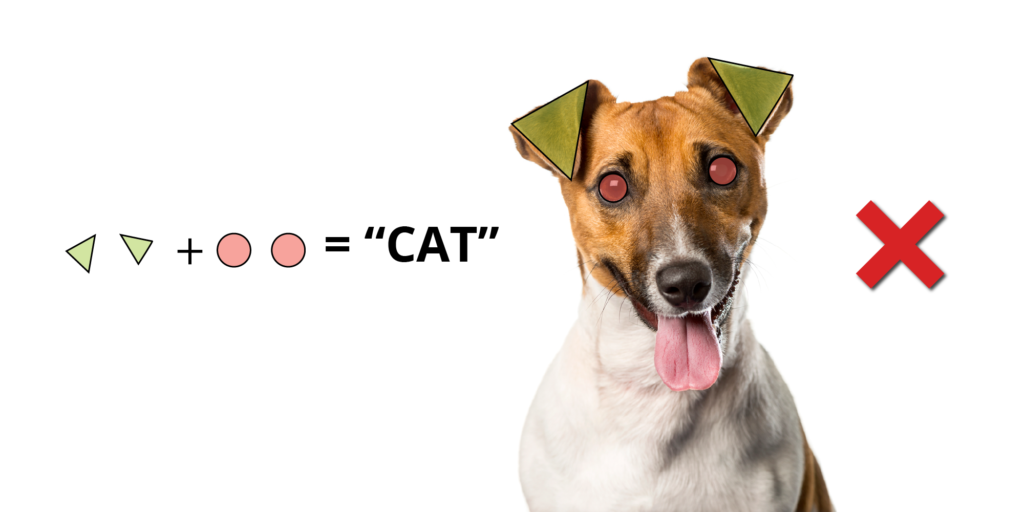

However, once the DLA encounters a dog with similar features, this rule returns a false positive. That’s because its current understanding of “CAT” is much too general. When humans make this same mistake, we call it a bias. The same applies to AI. After misidentifying a dog as a cat, the DLA goes back and adjusts its formula for “CAT.” Tracing the error back through the network to work out how much each weight contributed to it is called “backpropagation.” Let’s see how it works.

Paws and Effect: Fine-Tuning Feline Features

To improve its accuracy, the DLA would look for other feline features (i.e., parameters) within photos and adjust its current weights. The weights are adjusted using a mathematical technique called gradient descent, which reduces the DLA’s errors by repeatedly nudging each weight a small step in the direction that lowers the error.
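Here is a minimal sketch of gradient descent on a single artificial “neuron,” assuming a sigmoid output and a cross-entropy loss (a common pairing, chosen here for simplicity). The starting weights, the learning rate of 0.5, and the helper names `predict` and `gradient_step` are all assumptions for illustration. Real networks use decimal weights like these rather than the tidy scores of 5 and 10 in the analogy.

```python
import math


def sigmoid(z: float) -> float:
    """Squash any number into a 0..1 'confidence that this is a CAT'."""
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, x):
    """Weighted sum of the features, passed through the sigmoid."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def gradient_step(weights, bias, x, label, lr=0.5):
    """One gradient-descent update on a single example.

    For a sigmoid output with cross-entropy loss, the gradient of the loss
    with respect to the weighted sum is simply (prediction - label).
    """
    error = predict(weights, bias, x) - label
    new_weights = [w - lr * error * xi for w, xi in zip(weights, x)]
    new_bias = bias - lr * error
    return new_weights, new_bias


# Features: [has two triangular ears, has two circular eyes]
dog = [1.0, 1.0]
weights, bias = [1.0, 1.0], 0.0  # assumed starting point: both features matter

before = predict(weights, bias, dog)
for _ in range(20):
    weights, bias = gradient_step(weights, bias, dog, label=0)  # 0 means "not CAT"
after = predict(weights, bias, dog)

print(f"confidence the dog is a CAT: {before:.2f} before, {after:.2f} after")
```

Each step moves the weights a little in the direction that shrinks the error, so the confidence that the dog is a “CAT” falls with every pass.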

Let’s listen in to a personified DLA as it works through the “CAT” problem:

“I thought for sure that two triangles and two circles equaled ‘CAT,’ but that’s apparently not the case. I better change my weights until I know more. Going forward, I’m going to give having two triangles for ears a weight of ‘5’ and having two circles for eyes a ‘5’ as well. These characteristics don’t seem to be as important as I first thought. Now let’s look again at the image I got wrong and compare it to the one I got right.

“While the two triangles appear in both, I now see they are oriented differently in the ‘not CAT’ one. In fact, those triangles are pointing downward compared to the correct image. Therefore, triangle orientation must be an important consideration. I think I’ll add triangle orientation as a new feature of ‘CAT’ and assign having an orientation of ‘pointing upwards’ a weight of 10. So, if I see triangles for ears pointing upwards, I’m more confident it’s a ‘CAT.’”

The DLA now sets out with its updated formula and weights for “CAT”:

2 triangular ears (weight 5) +

Ear orientation upwards (weight 10) +

2 circular eyes (weight 5) = “CAT”
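To see what the update buys, the old and new formulas can be scored side by side. As before, this is a sketch of the analogy only: the feature names, the `cat_score` helper, and the cutoff of 20 are assumptions, and the dog is the droopy-eared one from the DLA’s first mistake.

```python
OLD_WEIGHTS = {"two_triangular_ears": 10, "two_circular_eyes": 10}
NEW_WEIGHTS = {"two_triangular_ears": 5, "ears_point_up": 10, "two_circular_eyes": 5}
THRESHOLD = 20  # assumed cutoff for guessing "CAT"


def cat_score(features: dict, weights: dict) -> int:
    """Add up the weights of the features that are present in a photo."""
    return sum(weight for name, weight in weights.items() if features.get(name, False))


cat = {"two_triangular_ears": True, "ears_point_up": True, "two_circular_eyes": True}
dog = {"two_triangular_ears": True, "ears_point_up": False, "two_circular_eyes": True}

for name, photo in [("cat", cat), ("dog", dog)]:
    old = cat_score(photo, OLD_WEIGHTS)
    new = cat_score(photo, NEW_WEIGHTS)
    print(f"{name}: old score {old}, new score {new}, CAT? {new >= THRESHOLD}")
```

The cat still reaches the cutoff, while the dog with downward-pointing ears now falls short. Any photo that shows all three features, though, will still be called a “CAT.”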

However, let’s see how this new formula plays out when the DLA is unexpectedly presented with a photo of a Chihuahua. The DLA confidently marks the diminutive canine a “CAT” only to be presented with an annoying buzzer sound and “Wrong!” message.

Let’s listen in again to the DLA’s reaction:

“WTF! How can that NOT be a ‘CAT’!…Ok, I gotta calm down…So you’re telling me two triangular ears pointed up and two circular eyes isn’t a ‘CAT’. What else is going on here? I mean…wait…what’s this thing in the nose area? Looks like the ‘for sure CAT’ pic had a triangular-shaped nose…and this new one has a square-shaped nose. Let’s check the one before…Hey! That one’s got a square nose too. Ok, I’m on to something here…So if it’s got two triangular ears oriented upwards with two eyes AND a triangular-shaped nose, then it’s got to be a ‘CAT.’ Let’s try that…Yes!…wait…”

Cat-astrophic Errors: Learning from Mistakes

And so it goes with the DLA as it struggles to refine its definition of things by constantly adjusting its weights, adding and removing parameters, and improving its accuracy through trial and error. Along the way, it will constantly run into exceptions to its formula. It will eventually consider such fine-tuned parameters as whisker length, pupil shape, body posture, and fur texture. It will even note that a “DOG” tends to have its tongue out when getting its photo taken while a “CAT” will only offer a soulless, stoic stare into the camera.
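The trial-and-error loop can be sketched with the classic perceptron learning rule, which is a much simpler stand-in for what deep learning actually does (it has one layer and no backpropagation). The three “photos” below are hypothetical toy data invented for this example, as are the feature order and the helper names.

```python
# Features per photo: [triangular ears, ears point up, circular eyes, triangular nose]
# Label: 1 = "CAT", 0 = "not CAT". Toy data invented for illustration.
PHOTOS = [
    ([1, 1, 1, 1], 1),  # cat
    ([1, 0, 1, 0], 0),  # dog with droopy ears
    ([1, 1, 1, 0], 0),  # Chihuahua: upright ears, square nose
]


def predict(weights, bias, x):
    """Guess "CAT" (1) when the weighted sum is positive."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0


def train(photos, max_epochs=50):
    """Perceptron rule: after every wrong guess, shift the weights toward the right answer."""
    weights, bias = [0, 0, 0, 0], 0
    for epoch in range(1, max_epochs + 1):
        mistakes = 0
        for x, label in photos:
            error = label - predict(weights, bias, x)  # +1, 0, or -1
            if error != 0:
                mistakes += 1
                weights = [w + error * xi for w, xi in zip(weights, x)]
                bias += error
        if mistakes == 0:  # a full pass with no buzzer sound
            return weights, bias, epoch
    return weights, bias, max_epochs


weights, bias, epochs = train(PHOTOS)
print("weights:", weights, "bias:", bias, "passes needed:", epochs)
```

On this toy data, the nose ends up with the largest weight because it is the only feature that separates the cat from both dogs. With more photos and more exceptions, the loop simply needs more passes and more features, which is the point of the analogy.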

Conclusion

While this analogy is a wild oversimplification of deep learning, it should serve as a basic template for understanding the process. It should also illustrate why vast amounts of data and computational power are needed to “train” AI. Note, too, that this analogy uses a simple static photo of a cat; imagine the amounts of data, compute, and backpropagation required for AI to understand road conditions for a self-driving car! It’s mind-boggling.

This complexity is also why AI is often seen as a “black box”; researchers don’t fully understand what’s happening under the hood of AI because the number of factors is just too large. It’s like counting grains of sand in a windstorm.

Resources for Folks Curious Like a “CAT”

If you found this analogy helpful and want to dive deeper into the world of AI and deep learning, here are some resources to get you started:

- Books:
  - “Deep Learning” by Ian Goodfellow, Yoshua Bengio, and Aaron Courville: A comprehensive textbook that covers the fundamentals and advancements in deep learning.
  - “Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow” by Aurélien Géron: A practical guide to building and training neural networks.

- Online Courses:
  - Coursera: Deep Learning Specialization by Andrew Ng: A popular series of courses that cover the basics and applications of deep learning.
  - edX: Introduction to Artificial Intelligence (AI) by IBM: A course that provides an overview of AI, machine learning, and deep learning.

- Websites and Tutorials:
  - TensorFlow.org: Tutorials and guides on using TensorFlow, an open-source platform for machine learning.
  - Kaggle.com: A platform for data science competitions and learning, offering datasets and tutorials to practice deep learning.

- Communities and Forums:
  - Reddit: Subreddits like r/MachineLearning and r/DeepLearning where enthusiasts and professionals discuss the latest in AI.
  - Stack Overflow: A Q&A site where you can ask questions and find answers related to programming and machine learning.

By exploring these resources, you can build upon the simple analogy provided here and gain a deeper understanding of how deep learning and AI work.