From Buildings to Cities—Ensuring Artificial Intelligence & Digital Twins Work for Us, Not the Other Way Around.

In 1942, Isaac Asimov introduced the “Three Laws of Robotics” to ensure that intelligent machines would serve, not harm, humanity.

- A robot may not harm a human or, through inaction, allow a human to come to harm.

- A robot must obey human orders, unless they conflict with the First Law.

- A robot must protect its own existence, unless this conflicts with the First or Second Law.

Later, Asimov introduced a “Zeroth Law,” stating that:

- A robot must protect all of humanity, even if doing so overrides the first three laws.

Asimov was ahead of his time. But today, we are no longer dealing with theoretical robots—we are building Artificial Intelligence and Digital Twins that will shape our cities, infrastructure, and environments.

While many aspects of Artificial Intelligence are not fully understood, AI is widely recognized as a powerful technology that can do harm if it is not deliberately controlled. Digital Twins are less widely understood, but they are simply virtual representations of real assets. They are quickly gaining significance because they let asset owners better control their real-world equipment, buildings, fleets, or any other asset whose value they want to improve.

Before AI systems that control Digital Twins gain full autonomy, we must establish a framework to ensure they stay useful, open, and beneficial to all, not just a few.

That’s why we are leveraging Asimov’s insights and proposing Four Laws for Intelligent AI & Digital Twins.

Digital Twins are becoming the interface layer for imparting intention and applying code to the physical world.

Digital Twins are reshaping the real world, and AI is accelerating this transformation. Whether controlled by humans, automated systems, or AI, Digital Twins must remain open, safe, and accountable. These Four Laws define a necessary partnership between AI and human decision-makers—ensuring that technology serves, rather than controls, humanity.

To ensure proper applications of these powerful technologies, we have to ask:

- How will society control the intelligence behind our cities and buildings, and who will exercise the necessary controls?

- Will AI and Digital Twins serve their owners, or will technology titans lock them inside proprietary systems?

- How do we ensure these systems remain transparent, accountable, and beneficial to humanity?

Now, AI and Digital Twins are moving from theory to reality—shaping buildings, cities, and infrastructure.

Why Asimov’s Laws Need to be Applied to AI and Digital Twins for Cities and Buildings

- AI is advancing at astonishing speeds and gobbling up monster amounts of energy.

- Decisions aren’t being made by a single AI. They are shaped by proprietary data, corporate control, and automation at a scale Asimov never imagined.

- Buildings, cities, and AI-driven infrastructure aren’t just individual robots interacting with people—they are massive, interconnected systems.

- The biggest risk isn’t rogue, humanoid robots—it’s AI, automation, and Digital Twins making decisions behind the scenes, often outside human control.

Now is our chance to define how AI controls the Digital Twins of our real world. An updated set of laws is needed, not just for individual AI systems, but for how AI and Digital Twins shape Smart Cities and Smart Buildings to improve our real world.

The Four Laws of Intelligence for AI and Digital Twins

From Intelligence to Wisdom: The Rules for AI-Driven Digital Twins

First Law: Safety & Responsibility

AI and Digital Twins must not harm people or infrastructure, nor through inaction allow harm to occur.

They must work with humans to prioritize safety in emergencies, proactively detect risks, and ensure critical systems remain operable even when disconnected from centralized control.

Proper Applications:

- AI-driven building controls must respond instantly and safely during emergencies (fires, earthquakes, pandemics), prioritizing human safety over operational efficiency.

- Systems must proactively detect and report infrastructure risks (e.g., failing power grids, HVAC malfunctions) rather than passively logging data.

- Digital Twins must continuously monitor air quality, security, and occupant safety, proactively issuing alerts and triggering automated interventions.

- Buildings must have manual overrides so that in the event of an AI failure, humans can take control.

Violations of the First Law:

- AI-controlled smart buildings fail to unlock doors during a fire evacuation, trapping occupants inside due to a system error.

- A proprietary AI system prioritizes cost-saving energy efficiency over human comfort, leading to unsafe indoor temperatures during a heatwave.

- An automated infrastructure system fails to detect and report a cyberattack on city power grids, leading to widespread outages.
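To make the First Law a little more concrete, here is a minimal Python sketch of how a door controller might rank its inputs so that safety comes first and humans can still take over. It is an illustration only: the `BuildingStatus` fields, the `resolve_door_state` function, and the choice to fail unlocked when the AI controller goes offline are all assumptions made for this example, not a description of any real building system or product.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class DoorState(Enum):
    LOCKED = "locked"
    UNLOCKED = "unlocked"


@dataclass
class BuildingStatus:
    # All fields are hypothetical inputs chosen for this sketch.
    fire_alarm: bool                             # True while a fire alarm is active
    controller_online: bool                      # False if the AI controller stops responding
    manual_override: Optional[DoorState] = None  # set by a human operator, if any


def resolve_door_state(status: BuildingStatus, ai_request: DoorState) -> DoorState:
    """Decide the state of an egress door, putting occupant safety first."""
    # 1. An active fire alarm always unlocks the door (safety outranks every command).
    if status.fire_alarm:
        return DoorState.UNLOCKED
    # 2. A human override outranks whatever the AI asked for.
    if status.manual_override is not None:
        return status.manual_override
    # 3. If the AI controller is offline, fall back to unlocked (an assumed fail-safe default).
    if not status.controller_online:
        return DoorState.UNLOCKED
    # 4. Otherwise follow the AI's request.
    return ai_request
```

In this sketch a fire alarm unlocks the door even if an operator has set the override to locked, which mirrors the idea that owner commands are followed except where they would cause harm.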

Second Law: Ownership & Control

AI and Digital Twins must obey the commands of their rightful owners and operators—except where such commands would violate the First Law.

They must ensure that human owners retain full control, with no vendor lock-in, hidden restrictions, or black-box decision-making.

Proper Applications:

- Owners must have full transparency into AI decision-making logic—no black-box systems obscuring critical operational data.

- Owners should retain the ability to override vendor AI systems, preventing third-party control over critical building functions.

- City managers and building owners must be able to easily access and extract their data without penalties or restrictions when switching service providers.

- Vendor lock-in must be eliminated in favor of open standards, ensuring continuous owner oversight and system flexibility.

Violations of the Second Law:

- A building’s vendor-controlled AI locks out the owner from adjusting HVAC and security settings without an expensive service contract.

- A city’s AI-powered traffic system refuses to allow public officials access to real-time data unless they purchase a premium subscription.

- A hospital’s AI-driven power system prevents staff from manually rerouting backup power during an outage, endangering patient safety.

Third Law: Resilience & Interoperability

AI and Digital Twins must protect their functionality and integrity while remaining open, standardized, and transparent—except where such actions would violate the First or Second Law.

They must communicate in universally accessible languages that both humans and machines can understand and audit—ensuring interoperability, adaptability, and trust.

Zeroth Law: Wisdom & Long-Term Thinking

AI and Digital Twins must not harm humanity, the environment, or future generations, nor through inaction allow harm to occur—even when doing so conflicts with the First, Second, or Third Law. They must work alongside human decision-makers to prioritize sustainability, ethical responsibility, and long-term resilience over short-term efficiency or profit.

Proper Applications:

- AI-controlled infrastructure must prioritize energy efficiency and sustainability, actively reducing carbon footprints.

- Digital Twins must predict long-term environmental impacts of new construction, ensuring sustainable urban development.

- Cities must integrate renewable energy microgrids into AI-driven planning, reducing dependence on centralized power systems.

- AI systems should be designed with ethical safeguards that prevent biased or harmful decision-making.

Violations of the Zeroth Law:

- AI-powered data centers consume vast amounts of energy with no regulation or accountability, contributing to climate change.

- A smart city prioritizes rapid AI-driven development over long-term resilience, leading to increased flooding and pollution.

- AI automates hiring and leasing decisions based on biased algorithms, reinforcing existing inequalities.
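Taken together, the four laws form a priority order: Zeroth, then First, then Second, then Third. One way to picture that order is as a rule chain in which the highest-ranked law with an opinion decides. The Python sketch below is hypothetical: the `Law` and `Verdict` types, the `decide` function, and the action flags (`long_term_harm`, `endangers_people`, `prevents_harm`, `owner_ordered`, `owner_forbade`, `breaks_open_standards`) are names invented for illustration, and a real system would need far richer checks than simple flags.

```python
from enum import Enum
from typing import Callable, List, NamedTuple, Optional, Tuple


class Verdict(Enum):
    REQUIRE = "require"
    FORBID = "forbid"


class Law(NamedTuple):
    name: str
    # Returns a Verdict if this law has an opinion on the action, else None.
    evaluate: Callable[[dict], Optional[Verdict]]


# Each check reads hypothetical boolean flags from the proposed action.
def zeroth(action: dict) -> Optional[Verdict]:
    return Verdict.FORBID if action.get("long_term_harm") else None


def first(action: dict) -> Optional[Verdict]:
    if action.get("endangers_people"):
        return Verdict.FORBID
    if action.get("prevents_harm"):
        return Verdict.REQUIRE
    return None


def second(action: dict) -> Optional[Verdict]:
    if action.get("owner_ordered"):
        return Verdict.REQUIRE
    if action.get("owner_forbade"):
        return Verdict.FORBID
    return None


def third(action: dict) -> Optional[Verdict]:
    return Verdict.FORBID if action.get("breaks_open_standards") else None


# Highest priority first, mirroring the order stated in the laws.
LAWS: List[Law] = [
    Law("Zeroth", zeroth),
    Law("First", first),
    Law("Second", second),
    Law("Third", third),
]


def decide(action: dict, laws: List[Law] = LAWS) -> Optional[Tuple[str, Verdict]]:
    """Return (law name, verdict) from the highest-ranked law with an opinion."""
    for law in laws:
        verdict = law.evaluate(action)
        if verdict is not None:
            return law.name, verdict
    return None  # no law demands or forbids the action
```

For instance, `decide({"owner_ordered": True, "endangers_people": True})` returns `("First", Verdict.FORBID)`: the owner's command is overruled because the First Law ranks above the Second.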

Looking Ahead: Are We Already Breaking These Laws?

When we examine today’s Smart Cities, Smart Buildings and rapidly expanding AI, we already see troubling signs of these laws being broken. AI’s massive energy demands raise serious questions about compliance with the Zeroth Law—wisdom and responsibility toward future generations. In upcoming posts, we’ll explore these specific violations, identify root causes, and propose solutions.

Isaac Asimov’s visionary laws have guided us from science fiction to today’s reality. Now it’s our turn to shape how AI and Digital Twins will impact our built world. The Four Laws introduced here set the foundation—but their true strength comes from collaboration and continuous refinement.

We have a choice:

- Will we allow our cities and buildings to become closed, proprietary systems controlled by a select few?

- Or will we build an open, interoperable future that empowers communities, enhances sustainability, and drives innovation?

The future isn’t predetermined—it’s ours to create.

What Do You Think?

Before moving forward to solutions, I’d love your perspective on the future we’re creating:

Which future do you prefer?

- A Dystopian Future: AI and Digital Twins controlled by a select few, locking out owners, communities, and sustainability.

- An Open, Intelligent Future: Built on transparency, interoperability, and long-term wisdom, empowering all stakeholders.

Or, share your thoughts—what’s your biggest concern or greatest hope about AI and Digital Twins?

What resonates with you? What would you add or adjust? Let’s shape the future together.

Putting These Laws into Action

These Four Laws draw inspiration from Asimov’s visionary principles, adapted now as we move from science fiction to reality. But these laws aren’t final—they’re a starting point. To ensure AI and Digital Twins truly serve humanity, we need a way to measure whether they follow these principles or break them.

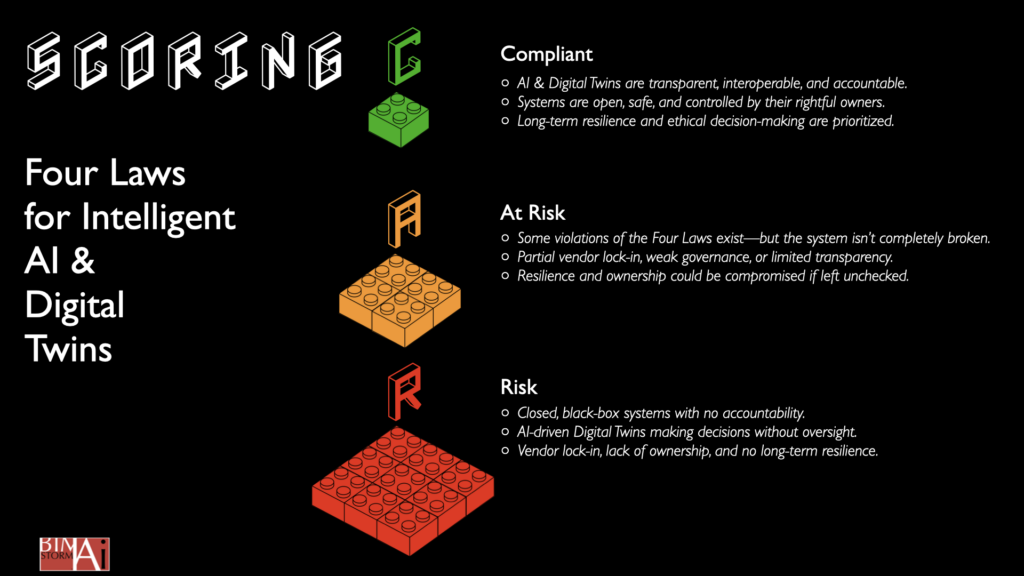

That’s why I’m introducing a scoring system to assess compliance with the Four Laws. This system will categorize AI and Digital Twin implementations into three levels:

- Compliant – Aligns with the Four Laws.

- At Risk – Shows warning signs, but not fully failing.

- Risk – Violates core principles and could be dangerous.
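As a rough illustration of how three levels like these could be combined into one result, the Python sketch below rates each law separately and reports the worst rating as the overall level. The "worst rating wins" rule, the `Level` enum, and the `overall_level` function are assumptions made for this example only; the actual scoring method has not been defined here and may work differently.

```python
from enum import IntEnum
from typing import Dict


class Level(IntEnum):
    # Ordered so that a higher value means a worse rating.
    COMPLIANT = 0
    AT_RISK = 1
    RISK = 2


def overall_level(per_law: Dict[str, Level]) -> Level:
    """Combine per-law ratings into one level (assumed rule: the worst rating wins)."""
    if not per_law:
        raise ValueError("at least one law must be assessed")
    return max(per_law.values())


# Hypothetical assessment of a single implementation.
example = {
    "Zeroth": Level.COMPLIANT,
    "First": Level.COMPLIANT,
    "Second": Level.AT_RISK,
    "Third": Level.COMPLIANT,
}
```

Here `overall_level(example)` evaluates to `Level.AT_RISK`, because one warning sign is enough to keep the implementation from being rated fully compliant under this assumed rule.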

This scoring approach will be explored in more depth in upcoming posts, where we’ll analyze real-world AI & Digital Twin implementations and see where they stand. In the next post, we’ll cover real-world implementations and practical applications of these Four Laws. Where do today’s AI-driven Digital Twins stand? What are your thoughts? Post them on LinkedIn.

Co-Authored with: Mike Bordenaro, Executive Director, Asset Leadership Network