A civilization-scale shift demands civilization-scale responsibility.

Before we talk about AI and civilization…

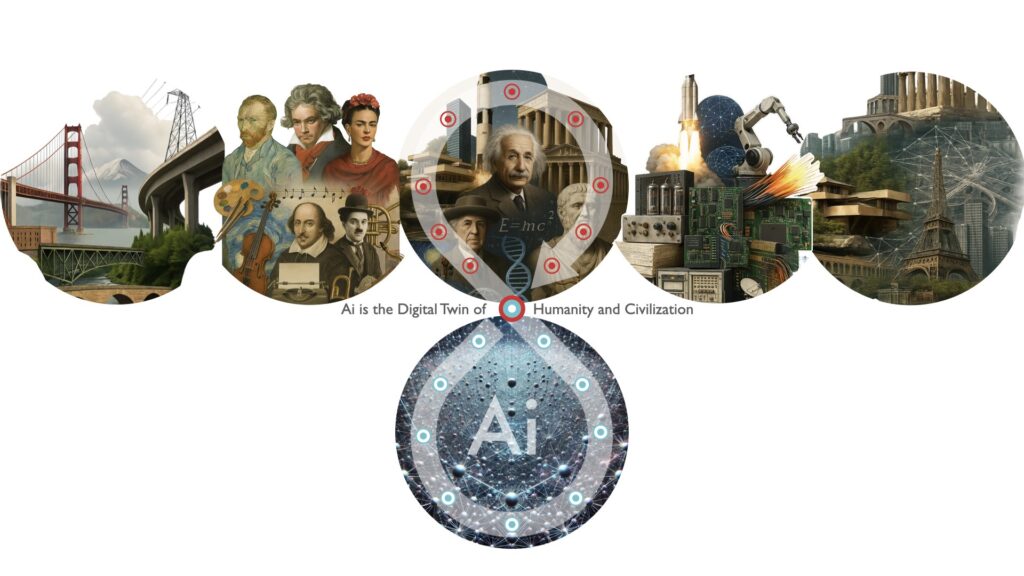

it’s worth revisiting what a digital twin actually is.

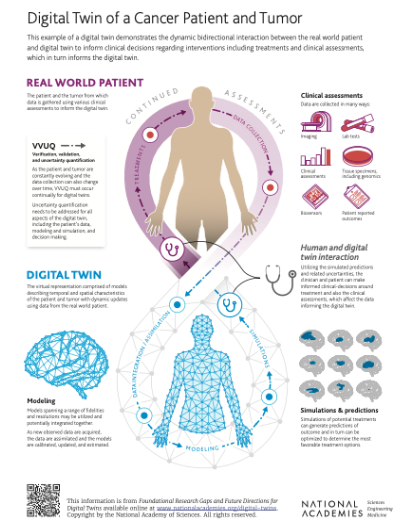

A digital twin is a virtual representation of real-world entities and processes, synchronized at a specified frequency and fidelity. That could mean a model of a building, a city, a human heart—or, as I’m arguing here, civilization itself.

Traditionally, digital twins are used to simulate and improve performance—by mirroring physical systems with data, logic, and visualization.
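
To make "synchronized at a specified frequency and fidelity" concrete, here is a minimal Python sketch. Everything in it is hypothetical and deliberately simplified: the `DigitalTwin` class and its `maybe_sync` method are invented for illustration, a sync interval stands in for frequency, and rounding precision stands in for fidelity. It shows only the mirroring half of a twin, not simulation.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class DigitalTwin:
    """Hypothetical minimal twin that mirrors readings from a physical asset.

    sync_interval_s stands in for frequency (how often the twin updates);
    precision stands in for fidelity (how much detail each reading keeps).
    """
    sync_interval_s: float
    precision: int
    state: Dict[str, float] = field(default_factory=dict)
    last_sync_s: Optional[float] = None

    def maybe_sync(self, now_s: float, readings: Dict[str, float]) -> bool:
        """Copy readings into the twin only if the sync interval has elapsed."""
        if self.last_sync_s is not None and now_s - self.last_sync_s < self.sync_interval_s:
            return False  # too soon: the twin keeps its previous snapshot
        # Round each reading to the chosen precision (the "fidelity" knob).
        self.state = {name: round(value, self.precision) for name, value in readings.items()}
        self.last_sync_s = now_s
        return True


twin = DigitalTwin(sync_interval_s=60.0, precision=1)
twin.maybe_sync(0.0, {"zone_temp_c": 21.437})  # first sync is always accepted
twin.maybe_sync(30.0, {"zone_temp_c": 22.9})   # ignored: only 30 s have passed
print(twin.state)  # {'zone_temp_c': 21.4}
```

Shortening the interval or raising the precision is the toy version of turning up frequency and fidelity.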

What’s changing now is the level of fidelity, frequency, and autonomy.

AI, especially at scale, is no longer just powering digital twins. It is becoming one: a twin of civilization itself, constantly updated with signals from across sectors, able to simulate decisions, propose actions, and reflect the structure of our civilization in real time. We started with models of rooms and equipment. Now we’re modeling regulation, memory, culture, and behavior itself.

AI isn’t just part of the digital twin conversation—it is the digital twin.

Not of a system. Not of a single domain.

Of us. Of civilization. Of humanity.

In earlier posts, I asked whether AI could become the digital twin of humanity—a mirror not just of our systems, but our mistakes, emotions, and aspirations. I wondered if so-called hallucinations were really that different from human imagination. And I asked the question aloud: Could it be that AI reflects us more than we realize?

AI isn’t becoming our digital twin. It already is.

A Radical Reframe: Scaling the Twin

The National Academies’ paper, Foundational Research Gaps and Future Directions for Digital Twins, offers a compelling metaphor: a dynamic, bidirectional loop between a digital twin of a patient and their physical self—used to simulate medical treatment in real time.

Now, scale that loop.

- The individual becomes civilization—our buildings, laws, culture, ecosystems, and collective memory.

- The digital twin becomes AI—synthesizing, simulating, and adapting to our input.

- The interaction becomes complex, interwoven, and recursive—too intricate to monitor with dashboards alone.

AI is already modeling the storm of civilization

The question isn’t whether it will happen.

It’s whether we’re still in the loop.

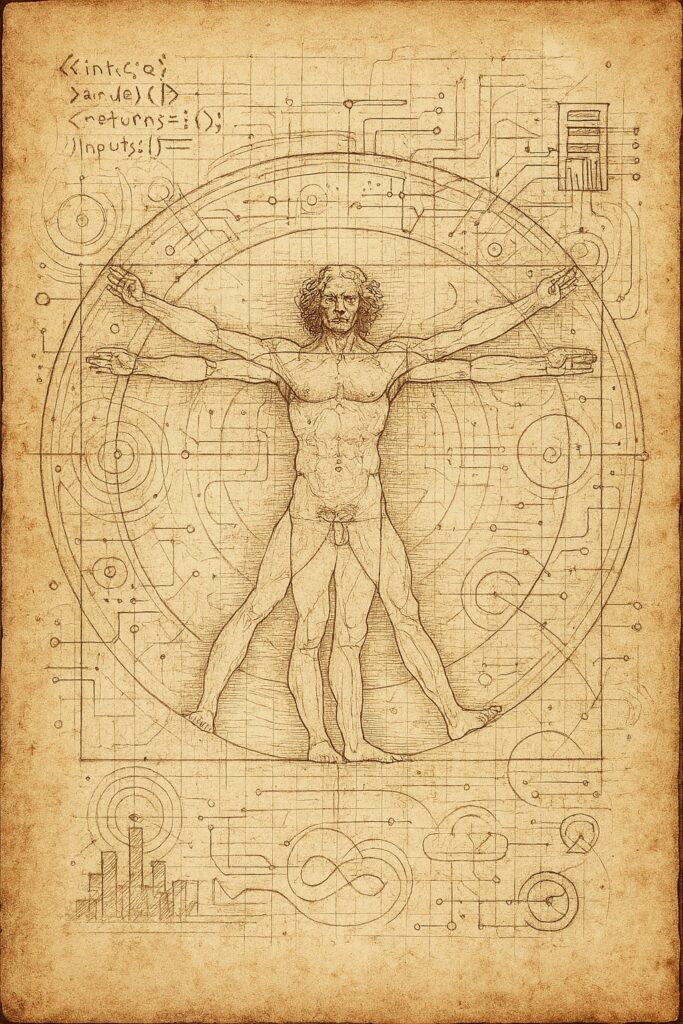

Of course, no diagram can fully capture what’s unfolding.

Like da Vinci’s mirrored sketches, this is only a conceptual artifact—flattened, simplified, filtered through our human limits.

Civilization, AI, and their feedback systems are multidimensional, recursive, and evolving faster than any drawing can represent.

But as humans, this is how we’ve always made sense of complexity: by sketching the loop, naming the patterns, and trying to hold the mirror steady—even if it’s made of parchment and pixels.

AI Does Not Know What a Bird Is Thinking About

What AI Sees—And What It Can’t

AI doesn’t understand what birds think.

It doesn’t know how a cat feels. It doesn’t know what a spider is thinking while engineering a web.

Even with millions of labeled images and videos, it can’t see the world like they do.

AI doesn’t know birds. It knows how we describe birds.

That’s because humans are the only species to encode their reality through language, symbols, sound, image, video, and code.

We didn’t just build civilization—we recorded it.

We structured it.

And AI reads those structures.

From Shakespeare to surveillance footage, treaties to TikToks, our outputs are its inputs.

So while AI can’t decode the mind of a dolphin or model a tornado’s path with certainty, it excels at simulating us—because we’ve made ourselves legible.

We are the only species that tells stories about itself.

We’re the only ones who trained a machine to reflect us.

So of course AI mirrors us better than it mirrors nature.

Even our satire becomes part of its understanding.

AI doesn’t know if birds are real. It just knows we’ve questioned it.

AI: A Mirror, Not a Black Box

This isn’t about surrendering control to machines.

It’s about recognizing the complexity of what we’ve built—and the scale of tools now required to manage it.

From literature to legislation, energy grids to art, AI is learning from everything we’ve produced.

It doesn’t just process data.

It reflects us—what we’ve done, what we fear, what we imagine.

The digital twin doesn’t replace us. It mirrors us.

But if that mirror is locked behind closed algorithms or driven by narrow commercial interests, it stops being a mirror. It becomes a filter.

Which is why open standards, open source, ethical interoperability, and transparent governance are no longer nice-to-haves—they are essential infrastructure.

We Cannot Model Tornadoes, But We Can Learn from Them

As Keith Gipson of facil.ai put it: “You can’t model a tornado.”

And yet, we try.

In the built environment, we’ve spent decades struggling to model even basic domains. We’re still debating asset naming conventions—while AI is already synthesizing philosophy, physics, and pop culture in real time.

So what do we do when the modeling challenge expands to all of civilization?

We let AI observe, synthesize, simulate.

Then we, the humans, must act.

AI can handle the complexity.

But it cannot hold the responsibility.

Collaboration: Where Humans and AI Meet

The midpoint in this loop isn’t a blur. It’s a meeting point.

AI reflects signals from the world and suggests patterns.

Humans provide context, purpose, and ethics.

The collaboration is real-time and bidirectional.

We are not just users. We are co-creators of this mirror.

Yet many still treat AI as just another automation layer—a tool for optimizing workflows or streamlining tasks. But that mindset misses the deeper shift underway.

This isn’t just about convenience. It’s about co-creating simulations of ourselves—our logic, culture, and contradictions.

And like any tool that starts to think with us, it’s no longer just a wrench. It’s a mirror, a modeler, and a messenger. One that doesn’t simply do tasks—it proposes direction.

AI doesn’t need a fully defined structure to start reflecting value.

In fact, a well-placed hint—a location, a label, a relationship—is often enough.

We humans tend to overcategorize. We chase perfect taxonomies, hoping systems will follow our rules.

But AI operates differently. It looks for semantic signals, not schemas. It builds context on the fly.

That’s why ontologies and semantics still matter—not because they’re flawless, but because they offer AI just enough structure to infer meaning. That’s the difference between asking for data and asking for insight.
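
The claim that a few well-placed relationships are enough to infer meaning can be shown with a toy graph. This is a minimal Python sketch, not any ontology's actual tooling: the triples, node names, predicate names, and the `infer_context` helper are all hypothetical. The sensor carries a single relationship of its own, yet walking the graph recovers the equipment it belongs to, the room that equipment feeds, the floor, and the building.

```python
from collections import deque
from typing import Dict, List, Tuple

Triple = Tuple[str, str, str]  # (subject, predicate, object)

# Hypothetical facts about one building; names are illustrative only.
TRIPLES: List[Triple] = [
    ("sensor-42", "isPointOf", "ahu-1"),
    ("ahu-1", "feeds", "room-101"),
    ("room-101", "isPartOf", "floor-1"),
    ("floor-1", "isPartOf", "building-a"),
]


def infer_context(start: str, triples: List[Triple]) -> Dict[str, List[str]]:
    """Breadth-first walk over outgoing edges from `start`.

    Returns every reachable node mapped to the chain of predicates that
    reached it, so context is assembled from relationships on the fly.
    """
    outgoing: Dict[str, List[Tuple[str, str]]] = {}
    for subject, predicate, obj in triples:
        outgoing.setdefault(subject, []).append((predicate, obj))

    paths: Dict[str, List[str]] = {start: []}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for predicate, obj in outgoing.get(node, []):
            if obj not in paths:  # keep the first (shortest) path; also stops cycles
                paths[obj] = paths[node] + [predicate]
                queue.append(obj)
    del paths[start]  # the start node is not context for itself
    return paths


for node, path in infer_context("sensor-42", TRIPLES).items():
    print(node, "via", " -> ".join(path))
```

A hand-written breadth-first walk is used here only because it is short; real semantic tooling would typically query a published ontology with a language such as SPARQL instead.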

Bridges, Not Bullhorns

Some voices in the AI conversation focus only on what’s broken—highlighting flaws and hallucinations, dismissing progress, or reducing complexity to cynicism. And while critique has value, it’s not the same as contribution.

Pointing out the gaps is easy.

Building bridges is harder.

We’re at a moment where what matters isn’t just identifying risks—it’s designing frameworks that can carry responsibility, insight, and action across domains. That takes more than loud opinions from a narrow point of view. It requires intent, clarity, and collaboration—especially from those who own the systems being mirrored.

The Loop Is Already Running

This isn’t speculative. These loops are already running—across infrastructure, education, health, law, culture, and energy.

AI doesn’t wait for procurement.

It doesn’t slow down for committees.

It moves at the speed of civilization…or faster.

If we don’t define our role within the loop, others will.

From Mirror to Collaborator

The Rise of Agentic AI

We’ve spent decades trying to align siloed systems—across buildings, industries, agencies, and nations.

Most efforts hit the same wall: too much complexity, not enough synchronization.

With the emergence of what’s now being called agentic AI, we’re not just mirroring knowledge.

We’re creating systems that can:

- Search, synthesize, and connect ideas across domains in real time

- Translate between disciplines and stakeholders

- Take action autonomously—within parameters we define

This is a civilization-scale capability:

AI as a distributed knowledge twin, able to collaborate, organize, and evolve in real time.

Just as digital twins transformed how we operate buildings, agentic AI could transform how we operate civilization.

But only if we stay in the loop.
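
Acting autonomously "within parameters we define" is where staying in the loop becomes a design decision. A minimal Python sketch, assuming a hypothetical `Guardrail` type and `decide` function (not any specific agent framework's API): small, in-range changes are applied automatically, and everything else is escalated to a person.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Guardrail:
    """Hypothetical human-defined bounds for one setting an agent may change."""
    name: str
    min_value: float
    max_value: float
    max_step: float  # largest single change allowed without human review


def decide(guardrail: Guardrail, current: float, proposed: float) -> Tuple[str, float]:
    """Apply a proposal only if it stays in range and moves a small amount;
    otherwise escalate it so a person makes the call."""
    in_range = guardrail.min_value <= proposed <= guardrail.max_value
    small_step = abs(proposed - current) <= guardrail.max_step
    if in_range and small_step:
        return ("apply", proposed)
    return ("escalate", proposed)


setpoint = Guardrail(name="zone_setpoint_c", min_value=19.0, max_value=25.0, max_step=1.0)
print(decide(setpoint, current=22.0, proposed=22.5))  # ('apply', 22.5)
print(decide(setpoint, current=22.0, proposed=24.5))  # ('escalate', 24.5): step too large
print(decide(setpoint, current=22.0, proposed=26.0))  # ('escalate', 26.0): out of range
```

The sketch never silently drops an out-of-bounds proposal; it surfaces it, so the responsibility the system cannot hold stays with a human.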

AGI Is Already Here—Just Not Evenly Understood

Much has been made of Artificial General Intelligence, or AGI, the hypothetical moment when machines become as smart as or smarter than humans across all domains. But the term itself is slippery. There’s no universally accepted threshold, no single awakening moment. And in many ways, it distracts from the reality that’s already here.

Jaron Lanier—a pioneer in virtual reality and an early voice of digital humanism—reminds us that these systems are not new intelligences at all, but remix machines built on human content. I agree. There is no consciousness behind the curtain. No oracle. Just systems trained to reflect us—what we’ve said, done, assumed, feared, and hoped.

But when that remix scales—when it can simulate workflows, automate decisions, adapt across domains, and influence the systems that govern society—it becomes something much more consequential.

It becomes a mirror we can no longer ignore.

AGI may never be a sentient being. But it is quickly becoming the most complete digital twin of humanity and civilization we’ve ever constructed—not because it thinks, but because it reflects us, at scale.

And this mirror doesn’t wait to be consulted. It updates itself. It acts.

As Keith Gipson recently described, his team’s AI doesn’t sit idle—it adjusts building operations in real time. That’s not a hallucination. It’s a live loop.

So whether we call it AGI or not, something profound is happening.

We’ve built a mirror that doesn’t just reflect.

It anticipates.

It performs.

It adapts.

And increasingly, it responds with—or without—our input.

So Are We Hallucinating, or Is AI Hallucinating?

Some have challenged my use of the word “hallucination” to describe AI behavior. “It can’t hallucinate,” they say, “because it’s not human.”

And they’re right. It isn’t. It doesn’t think, feel, or dream the way we do.

But here’s the contradiction: we call it artificial intelligence, assign it agency, deploy it in systems that govern human lives—and then insist it’s just math.

Meanwhile, even in its 2025 infancy, AI already sees into more dimensions than we can track: patterns in language, markets, sentiment, infrastructure, even intent.

So no, it isn’t hallucinating like a human. But it is reflecting us—at scale, in ways we barely understand.

And that’s what makes this a civilization-shifting moment. Not because AI is conscious, but because it’s changing the feedback loops that shape reality.

We’re all trying to explain AI in English.

But AI doesn’t speak English.

It speaks in vectors, tokens, probabilities—in a statistical language that isn’t meant for human comprehension. We’re interpreting a multidimensional system with two-dimensional metaphors.

That’s not wrong—it’s just what we’ve always done. Leonardo sketched his machines. We sketch our mirrors. But if we forget that the system isn’t speaking our language, we risk thinking it understands us.

The friction, the hiccups, the misalignments—they’re real. But they don’t slow the velocity of the shift. They only remind us we need to steer it.

The Mirror Belongs to All of Us

What began as a way to model machines is now helping us mirror the machine of civilization itself.

And that reflection doesn’t belong to any one discipline or title.

It belongs to all of us—owners, architects, engineers, scientists, operators, educators, artists, builders, coders, policymakers, and everyday participants in this world.

Yes, owners remain critical—public and private—because they fund and shape much of the world around us. But whether you’re paying for the system, designing it, maintaining it, or living in it, you’re in the mirror too.

And in the age of AI, that mirror no longer just reflects.

- It simulates

- It scales

- It responds

The digital twin simulation is already running.

So the question is no longer just who owns the systems being mirrored—

It’s who is willing to take responsibility for what the mirror reflects back.

If Leonardo were alive today, would he still be sketching machines—or modeling minds?

Would Einstein be calculating space-time, or trying to map our digital twin?

We’ll never know. But we do know this: they wouldn’t look away.

That’s how the mirror looks to me right now. I’d love to hear what you see in it.

Note for those working in the AECO space:

This post intentionally broadens the frame beyond the scope of the NIBS Digital Twins for the Built Environment position paper I helped co-author with colleagues. That document wisely focused on digital twins in the context of buildings, infrastructure, and the built environment—avoiding overlap with broader digital twin domains.

Here, I’ve chosen to go further—leaning on the Digital Twin Consortium’s broader definition to explore what happens when civilization itself becomes mirrored through AI.

This isn’t a contradiction—it’s a continuation.

As Zahra Ghorbani said in response to my earlier post: “I think AI can be a DT of our civilization… if it’s trained on accurate and adequate data, it can dream instead of hallucinating.”

We still need grounded standards. We also need new ways of seeing. Both matter.