How does the IoT really change the future of commercial building operations?

November 2014

The Cutting-Edge of IoT

The Internet of Things World Forum just presented its new IoT Reference Model, recognizing familiar building automation brands as leading in the category of Edge Computing.

A number of Internet of Things (IoT) community gatherings were held this October. GE’s Minds & Machines, the IoT World Forum and GigaOm’s Structure Connect are just the ones that had members of the Industrial Internet of Things Consortium as headliners. GE, Cisco, IBM and Intel took to the podiums, often showing their support for the IoT Reference Model covered here. What are the important takeaways for commercial building automation?

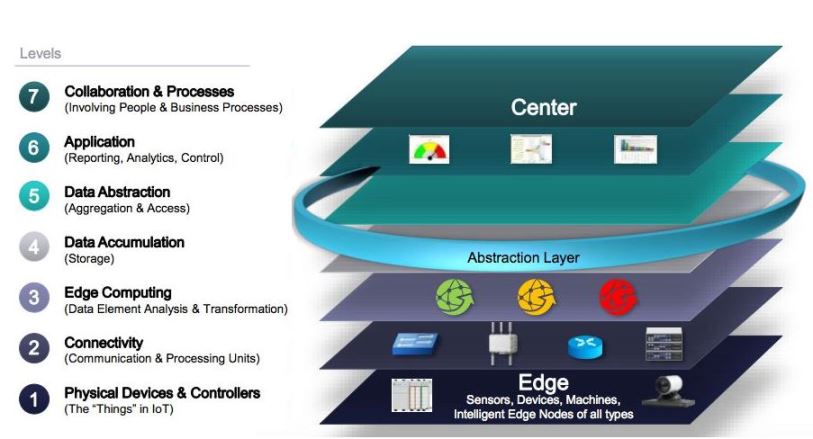

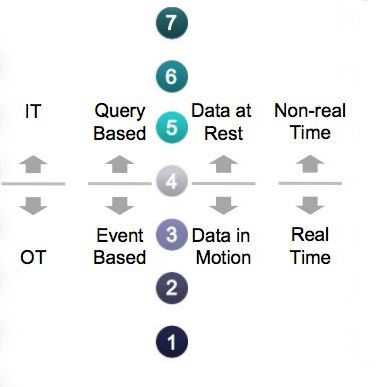

The model sorts the dozens of technologies that together make up the IoT into seven functional levels. Devices send and receive data, interacting with the Network, where the data is transmitted, normalized and filtered using Edge Computing before landing in Data storage / Databases. There it is accessible by Applications, which process it and provide it to people who will act and collaborate. The IoT Reference Model emphasizes Edge Computing: all the processing that is expected to happen at the ‘Thing’ level, that is, among all the physical devices and controllers that now have microprocessors to ‘think’ and radios to ‘talk’ to one another.

Cisco has sometimes called this Fog Computing, playing on a meteorological metaphor that draws a contrast with Cloud Computing. The metaphor fits: when devices use their data-sharing and decision-making capabilities to work together to suppress what is extraneous and prioritize what is important, only select data makes it into the central data stores of Level 4 for further processing in the cloud.

It’s not practical to transport, store and centrally process all the

sensor data that can be collected from a typical piece of industrial

equipment, and there are obvious response-time, reliability and

security advantages to processing locally. Moreover, while it’s clear that sensor-equipped edge devices can generate data much faster than cloud-based apps can ingest it, the size of that gap is not well quantified and varies by application. ‘Edge Software’ is the name the model gives to any module that has evolved to configure, address, directly process and temporarily store device data.
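To make the idea of suppressing extraneous data at the edge concrete, here is a minimal sketch of one common approach, a deadband filter. It is an illustration only, not something the IoT Reference Model prescribes; the class name `EdgeFilter`, the 0.5-degree deadband and the sample temperature readings are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class EdgeFilter:
    """Hypothetical edge-side filter: forward a reading only when it has
    moved at least `deadband` away from the last forwarded value."""
    deadband: float
    last_sent: Optional[float] = None
    forwarded: List[float] = field(default_factory=list)

    def offer(self, value: float) -> bool:
        """Return True if the reading is forwarded 'north', False if suppressed."""
        if self.last_sent is None or abs(value - self.last_sent) >= self.deadband:
            self.last_sent = value
            self.forwarded.append(value)
            return True
        return False


# Assumed zone-temperature readings (degrees C) arriving from one sensor.
readings = [21.0, 21.1, 21.05, 21.6, 21.7, 23.0, 23.1]

edge = EdgeFilter(deadband=0.5)
for reading in readings:
    edge.offer(reading)

# Only the readings that represent a meaningful change leave the device.
print(edge.forwarded)
```

In this sketch seven readings are collected locally but only three would be sent on for central storage, which is the kind of reduction the paragraph above describes.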

The Tridium Niagara framework, DGLogik graphics visualization software

and a number of telemetry provisioning toolsets were recognized during

the IoTWF presentation as pioneers in the Edge Software category. The

model also specifies a layer of abstraction between such Edgeware and

Cloud applications because there is so much variability above and below

to cope with. It calls this layer IoT Middleware and counts on it to

deliver agility and scalability and eventually interoperability.

The IoT events mentioned above had a wide industrial scope and were not

opportune for explaining how the IoT Reference Model can be used to

describe IoT-era information flows in the context of data-driven

Commercial Buildings, so I’ll take that opportunity here.

The careful wording of Data Element Analysis at the Edge and post-data-cleansing Analytics in the Cloud recalls the instructive article by John Petze of SkyFoundry from May 2013, “Analytics, Alarms,

Analysis, Fault Detection and Diagnostics: Making Sense of the

Data-oriented Tools Available to Facility Managers.” As Petze lays it

out, it is useful to look at all these categories of data tools in

terms of data scope and processing location. Basic BAS alarms typically evaluate a sensor against a limit, referencing one specific point identified to the BAS. They have narrow data scope and react in near real-time with local processing at the device level.

There are now new controller products equipped with sophisticated

analytics engines that still do local, real-time processing, while also

tracking events against business and operational rules, thus offering a

historical perspective. In fact, Tridium just announced Niagara

Analytics Framework, a new data analytics engine native to the Tridium

Niagara controller that does just that. Another example of products

with analytics at the embedded-device level is the line of controllers

and IoT products from Intellastar.

The Petze article makes the distinction between the alarms and

analytics categories this way: “While an alarm might tell us our

building is above a specific KW limit right now, analytics tells us

things like how many hours in the last 6 months did we exceed the

electrical demand target? And how long were each of those periods of

time, what time of the day did they occur and how were those events

related to the operation of specific equipment systems, the weather or

building usage patterns.”
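The distinction Petze draws can be sketched in a few lines. The example below is an illustration under stated assumptions, not code from SkyFoundry or any BAS product: the 500 kW target, the hourly sample data and the function names `alarm` and `exceedance_periods` are all hypothetical. The alarm looks at one current value; the analytic looks back over history and reports how long, and when, the target was exceeded.

```python
from datetime import datetime, timedelta

DEMAND_TARGET_KW = 500.0  # hypothetical electrical demand target


def alarm(kw_now, limit=DEMAND_TARGET_KW):
    """Alarm-style check: is the building above the limit right now?"""
    return kw_now > limit


def exceedance_periods(samples, limit, interval):
    """Analytics-style check over history.

    `samples` is a time-ordered list of (timestamp, kw) pairs taken at a
    fixed `interval`. Returns a list of (start, end) pairs, one for each
    continuous run of samples above `limit`.
    """
    periods = []
    start = None
    prev_ts = None
    for ts, kw in samples:
        if kw > limit:
            if start is None:
                start = ts          # a new exceedance period begins
        elif start is not None:
            periods.append((start, ts))  # period ends when demand drops back
            start = None
        prev_ts = ts
    if start is not None:           # still above the limit at the last sample
        periods.append((start, prev_ts + interval))
    return periods


# Assumed hourly demand readings starting at noon on 1 October 2014.
t0 = datetime(2014, 10, 1, 12)
hour = timedelta(hours=1)
kw_values = [480.0, 510.0, 530.0, 490.0, 470.0, 520.0, 495.0]
samples = [(t0 + i * hour, kw) for i, kw in enumerate(kw_values)]

periods = exceedance_periods(samples, DEMAND_TARGET_KW, hour)
total_hours = sum((end - start).total_seconds() for start, end in periods) / 3600

print("Alarm active now:", alarm(samples[-1][1]))
print("Hours above target:", total_hours)
for start, end in periods:
    print("Exceeded from", start.strftime("%H:%M"), "to", end.strftime("%H:%M"))
```

Correlating those periods with equipment operation, weather or occupancy, as the quote goes on to describe, would mean joining them against additional data sets, which is where wider data scope comes in.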

Edge Computing offers the promise of greatly improving the signal-to-noise ratio in results from another category of tool identified by Petze: automated Fault Detection and Diagnostics. In a Building IoT scenario, downstream terminal devices such as VAVs and other boxes would message amongst themselves to determine whether there is a problem with their parent AHU, and so stop themselves from triggering hundreds of extraneous faults. Tomorrow’s analytics will build on the ability to capture such hierarchical structure in tagging conventions set by industry standardization efforts like Project Haystack. That is another good reason to consider attending Haystack Connect 2015 next May.
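The fault-suppression idea can be sketched as follows. This is a simplified illustration: the equipment names, the `parent_ahu` field and the `reportable_faults` function are assumptions invented for the example, not Project Haystack tag names or any vendor's API. It also uses a single lookup table where the scenario above imagines devices messaging one another.

```python
# Hypothetical equipment records. "parent_ahu" stands in for whatever
# tagging convention captures the VAV-to-AHU relationship.
equipment = {
    "AHU-1": {"type": "ahu", "fault": True},
    "VAV-101": {"type": "vav", "parent_ahu": "AHU-1", "fault": True},
    "VAV-102": {"type": "vav", "parent_ahu": "AHU-1", "fault": True},
    "AHU-2": {"type": "ahu", "fault": False},
    "VAV-201": {"type": "vav", "parent_ahu": "AHU-2", "fault": True},
}


def reportable_faults(equipment):
    """Return the names of faulted equipment, skipping any terminal unit
    whose parent AHU is itself in fault (its fault is treated as a symptom)."""
    reported = []
    for name, info in sorted(equipment.items()):
        if not info["fault"]:
            continue
        parent = info.get("parent_ahu")
        if parent is not None and equipment[parent]["fault"]:
            continue  # suppress: the upstream AHU fault already covers this
        reported.append(name)
    return reported


print(reportable_faults(equipment))
```

Here four devices are in fault but only two faults are reported: the AHU that is the likely root cause, and the one VAV whose parent AHU is healthy.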

Niagara Analytics Framework and Intellastar, among others, fulfill the Edge Software role described by the IoT Reference Model. There is yet one more type of analytics software, this one with wide data scope, taking in multiple data sets from sources beyond controller data, such as energy data, occupancy data and weather sources. The scope might also widen to include security system or lighting data, or personal identifiers such as the data from location services built into mobile devices, for example the Apple iBeacon system. (Real estate leaders reveal great enthusiasm for mobile connectivity investment in general and location-aware technology in particular in recent interviews.) The wider the data scope, the more sophisticated a device can be in terms of controlling processes, executing rules and processing data. The

processing location can be local as long as the device executing the

rule can receive the data from contributing sources in a protocol it

understands. To date, many wide-data-scope analytics have featured

processing in the cloud because the real-time aspect was not a driving

concern.

John Petze’s article does emphasize that Building Operational Analytics

is a fast-moving field, and he does include a section on the ability of

analytics to ‘command’ a control system. This section points to

automated demand response as one application for analytics programs

that can drive real-time events across multiple edge devices.

As both the 2013 Petze article and the new 2014 IoT reference model

emphasize, analytics tools have to be presented with data in open,

accessible formats by the originating sources. Data collection today is typically via BACnet, oBIX, Haystack, Modbus and similar protocols, as well as XML (perhaps Green Button data), CSV file imports and queries to SQL databases. In the coming era of IoT, analytics will also collect data via M2M protocols such as MQTT (a messaging protocol) or DDS (the peer-to-peer Data Distribution Service), which were designed for small-device communications like sensor events.

The data that does make it ‘north’ moves into the cloud via IoT

Middleware, where it will be accessible by business applications. This

month IBM and Microsoft announced that they are working together to

deliver key IBM middleware such as WebSphere Liberty, MQ, and DB2 on

Azure, Microsoft’s cloud platform. This is an example of

Industrial Internet of Things Consortium members beginning to

collaborate to make this IoT model work. Real estate CTOs and

CFOs should cheer this announcement. For a number of years, they have

been working to consolidate the application software they use, such as

ERP and Workplace Management packages, into integrated IT suites. Now

they can benefit from that unified database architecture and cloud

apps. As Jim Whalen, SVP & CIO of Boston Properties, explains in a

recent interview, “Now we can move ahead with light mobile apps that

put data visualizations and different types of dashboards at the

fingertips of our tenants and staff.”
