Edge Computing Explained

“Edge computing places networked computing resources as close as possible to where data is created.”

Zach Netsov
Product Specialist, Contemporary Controls
Contributing Editor

November 2019


IBM’s edge computing definition: “Edge computing places networked computing resources as close as possible to where data is created. Edge computing is an important emerging paradigm that can expand your operating model by virtualizing your cloud beyond a data center or cloud computing center. Edge computing moves application workloads from a centralized location to remote locations.”

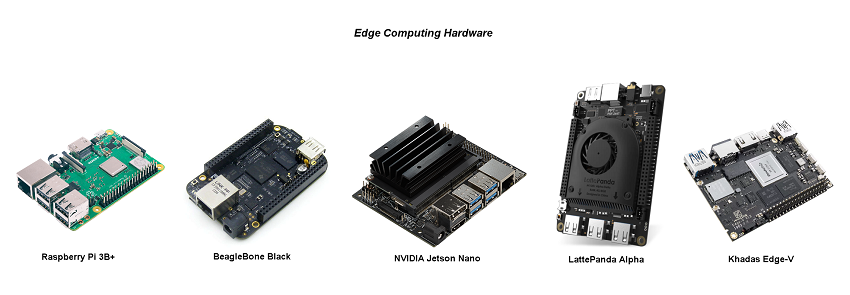

Advances in embedded hardware performance over the past few years are one of the main factors that have enabled the rise of edge computing. Edge computing is strongly associated with cloud computing, IoT, and the application of small but powerful embedded computing platforms such as single-board computers (SBCs). Even though edge computing hardware is defined by its location, not its size, small but powerful platforms make for more convenient installations in a wider range of applications, with lower power consumption.

To understand computing on the network edge, we need to reflect on the rise of cloud computing and IoT, as these topics are closely related. In recent years, cloud computing has become one of the biggest digital trends; it mainly involves the delivery of powerful computing resources to remote devices connected over the Internet. Increasingly, the devices accessing cloud services are IoT appliances that transmit data online for processing and analysis in the cloud. Connecting IoT appliances such as cameras, HVAC equipment, or other building and process automation equipment to the cloud facilitates the creation of smart buildings, smart homes, smart factories, and smart cities. However, with the rapid rise of IoT, transmitting an ever-increasing volume of data for remote, centralized processing is becoming problematic. Heavy data transmission to cloud-based services can strain available network capacity, introduce latency that slows response times, and increase cloud computing costs dramatically. These are some of the main drivers for the rise of edge computing.

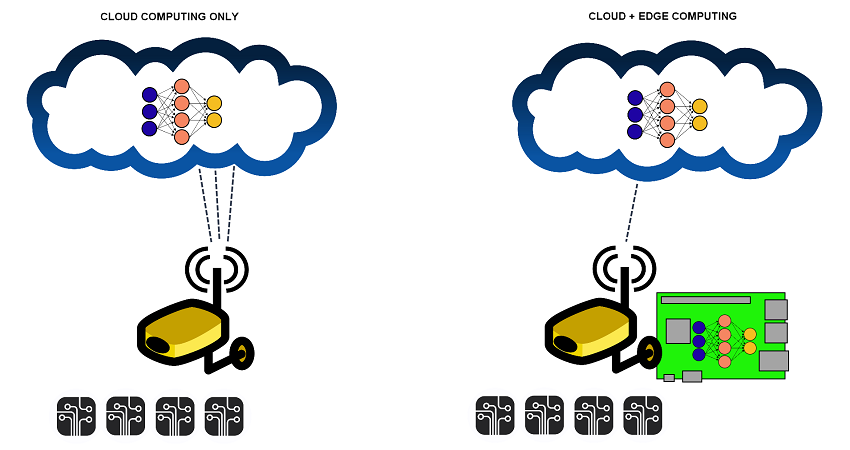

Edge computing allows devices that would otherwise have relied entirely on the cloud to process some of their own data locally. For example, a networked camera can perform local data processing for visual recognition and respond accordingly, instead of sending the data it has captured to the cloud, waiting for it to be processed there, and receiving the processed response back. Eliminating this dependency on the cloud and performing local data processing reduces latency (the time taken to generate a response from a data input), as well as the cost of and need for the mass data transmission associated with cloud services. Edge computing mitigates latency and bandwidth constraints in new classes of IoT applications by shortening the distance between devices and the cloud services they require, and by reducing network hops. Edge computing is important due to the growing demand for faster responses from AI services, the constant rise of IoT applications, and the increasing pressure on network capacity. As we enter the next step in digital evolution and increasingly use artificial intelligence services to optimize building and process automation equipment in smart buildings and smart cities, data processing at the edge will become even more critical.
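To make the latency argument concrete, the rough sketch below compares the two paths for a single camera frame. Every number in it (frame size, uplink speed, round-trip time, inference times) is an assumed placeholder rather than a measurement, and the function names are hypothetical.

```python
def cloud_latency_ms(payload_bytes, uplink_mbps, round_trip_ms, cloud_inference_ms):
    """Estimate response time when the frame is sent to the cloud for processing."""
    # Time to push the payload through the uplink, in milliseconds.
    transfer_ms = (payload_bytes * 8) / (uplink_mbps * 1_000_000) * 1000
    return transfer_ms + round_trip_ms + cloud_inference_ms


def edge_latency_ms(edge_inference_ms):
    """Estimate response time when the device processes the frame itself."""
    return edge_inference_ms


# Assumed values, for illustration only.
cloud_ms = cloud_latency_ms(
    payload_bytes=500_000,   # one compressed camera frame
    uplink_mbps=10,          # available upstream bandwidth
    round_trip_ms=60,        # network round trip to the cloud region
    cloud_inference_ms=20,   # fast data center hardware
)
edge_ms = edge_latency_ms(edge_inference_ms=150)  # slower embedded hardware

print(f"cloud path: {cloud_ms:.0f} ms, edge path: {edge_ms:.0f} ms")
```

With these assumed inputs, most of the cloud path is spent uploading the frame, so the edge device can respond sooner even though its processor is slower. Different assumptions (a smaller payload, a faster link) would shift the balance.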

Consider a neural network algorithm. Neural network algorithms are very powerful once trained, but training them requires processing large data sets (in general, the more data fed to the neural net, the better it performs) and very powerful hardware to complete the training in a timely manner. Training can take weeks on powerful computers, depending on the complexity and size of the training data. Today, Amazon (Machine Learning on AWS), Microsoft (Azure Cognitive Services), Google (Cloud AI Hub), and IBM (Watson) all offer cloud-based voice recognition, vision recognition, and other AI services that can receive data such as a still image, voice, or video feed and return a cognitive response. These cloud AI services rely on neural networks that have been pre-trained on extremely powerful data center servers. When input data is received, the data center (cloud) servers perform inference to determine what the connected device is looking at. Alternatively, in an edge computing scenario, a neural network is still trained on a data center server, as training requires a lot of computational power, but the trained network can then be distributed to remote computing devices at the edge.
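The split between central training and edge inference can be sketched in a few lines. The example below is a deliberately tiny stand-in, assuming a single logistic neuron in place of a real neural network, two made-up numeric features per sample, and JSON as the distribution format. The names `train_central`, `export_model`, and `EdgeDevice` are hypothetical.

```python
import json
import math


def train_central(samples, labels, epochs=200, lr=0.5):
    """Stand-in for data center training: fit one logistic neuron with SGD."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            z = sum(w * xi for w, xi in zip(weights, x)) + bias
            pred = 1.0 / (1.0 + math.exp(-z))
            err = pred - y
            weights = [w - lr * err * xi for w, xi in zip(weights, x)]
            bias -= lr * err
    return {"weights": weights, "bias": bias}


def export_model(model):
    """Serialize the trained parameters so copies can be shipped to edge devices."""
    return json.dumps(model)


class EdgeDevice:
    """Holds a copy of the trained parameters and runs inference locally."""

    def __init__(self, serialized_model):
        params = json.loads(serialized_model)
        self.weights = params["weights"]
        self.bias = params["bias"]

    def infer(self, x):
        # Inference is a single cheap pass; no training happens on the device.
        z = sum(w * xi for w, xi in zip(self.weights, x)) + self.bias
        return z >= 0.0


# Assumed toy data: label 1 marks the class the device should flag.
samples = [[0.1, 0.2], [0.2, 0.1], [0.8, 0.9], [0.9, 0.7]]
labels = [0, 0, 1, 1]

package = export_model(train_central(samples, labels))  # done once, centrally
devices = [EdgeDevice(package) for _ in range(3)]        # copies at the edge

flags = [device.infer([0.85, 0.8]) for device in devices]
print(flags)
```

The expensive loop runs once in `train_central`, while each `EdgeDevice` only needs enough compute for the weighted sum in `infer`, which is the asymmetry the paragraph above describes.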

For example, a neural network algorithm used in a factory manufacturing printed circuit boards (PCBs) would be shown example images of correctly produced boards and then examples of defective boards, so that it can learn to distinguish between the two. Once training is complete, a copy of the neural network is deployed to connected edge computing hardware, distributing the load and shortening the path to intelligence. This allows the edge device to make cognitive decisions on its own and identify defective printed circuit boards without transmitting any video data to the cloud servers. Latency is therefore greatly improved, and the bandwidth demands on the network are decreased, as data only has to be reported back to the cloud when defective boards are identified, in order to further train and improve the neural network algorithm. This approach of training a neural network centrally and deploying copies for execution at the edge has considerable potential.
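The bandwidth saving in the PCB scenario can be illustrated with a small simulation. This is only a sketch under stated assumptions: the frame size, the defect rate, and the `solder_bridge` flag standing in for the deployed neural network are all invented for the example, and `CloudUplink` is a hypothetical counter rather than a real cloud client.

```python
from dataclasses import dataclass, field

FRAME_BYTES = 200_000  # assumed size of one inspection image


@dataclass
class CloudUplink:
    """Hypothetical uplink that just counts what would be transmitted."""
    bytes_sent: int = 0
    reports: list = field(default_factory=list)

    def send(self, board_id, size_bytes):
        self.bytes_sent += size_bytes
        self.reports.append(board_id)


def is_defective(frame):
    # Placeholder for the locally deployed, pre-trained neural network.
    return frame["solder_bridge"]


def inspect_in_cloud(frames, uplink):
    """Cloud-only approach: every frame is uploaded for remote inference."""
    for frame in frames:
        uplink.send(frame["board_id"], FRAME_BYTES)


def inspect_at_edge(frames, uplink):
    """Edge approach: infer locally and upload only frames flagged as defective."""
    for frame in frames:
        if is_defective(frame):
            uplink.send(frame["board_id"], FRAME_BYTES)


# Assumed production run: 1,000 boards, with every 50th one defective.
frames = [{"board_id": i, "solder_bridge": i % 50 == 0} for i in range(1000)]

cloud_link, edge_link = CloudUplink(), CloudUplink()
inspect_in_cloud(frames, cloud_link)
inspect_at_edge(frames, edge_link)

print(f"cloud-only upload: {cloud_link.bytes_sent / 1e6:.0f} MB")
print(f"edge upload:       {edge_link.bytes_sent / 1e6:.0f} MB")
```

Under these assumptions the edge device uploads 20 frames instead of 1,000, and those 20 are exactly the defective examples that are useful for further training.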

In the building automation space, next-generation analytics software that uses cloud-based AI to predict and diagnose issues without any human intervention (requiring robust data sets and sophisticated neural net algorithms) will soon be a reality. Edge hardware can be useful in any scenario where rolling out local computing power at the extremities of a network can reduce reliance on the cloud. This becomes very important in mission-critical systems, and in systems that cannot always be reliably connected to the cloud.

About the Author

Professional Information

Zach Netsov is a Product Specialist at Contemporary Controls focused on the BASautomation line of products, which provides solutions for both small and scalable building management. He received his BSEE from DeVry University with a concentration in renewable energy. At Contemporary Controls, Zach is part of the team that championed the design and creation of a BASpi I/O board for Raspberry Pi.
