
November 2021

AutomatedBuildings.com


AI, with all its promise to predict weather, floods, machine failures and disease before their onset, is finding applications in the criminal justice system, advising judges on whom to send to jail, while invisible AI in spam filters and autocorrect is permeating our daily lives.

A discussion is brewing about AI Ethics and its role in the scaling of Artificial Intelligence among technology-loving innovators.

AI is trained on data from all around the world; it carries the biases of our societies and cements them with a complete lack of transparency.

So there is a debate about the need to regulate AI to ensure that it does not unfairly affect minorities and people of certain races who are not fairly represented in the rooms where AI is designed. This includes women left behind by hiring algorithms, Black people, queer people, disabled people and more. On the other hand, some people in the technology industry argue that regulation puts the brakes on fast innovation, and they call for no regulation at all.

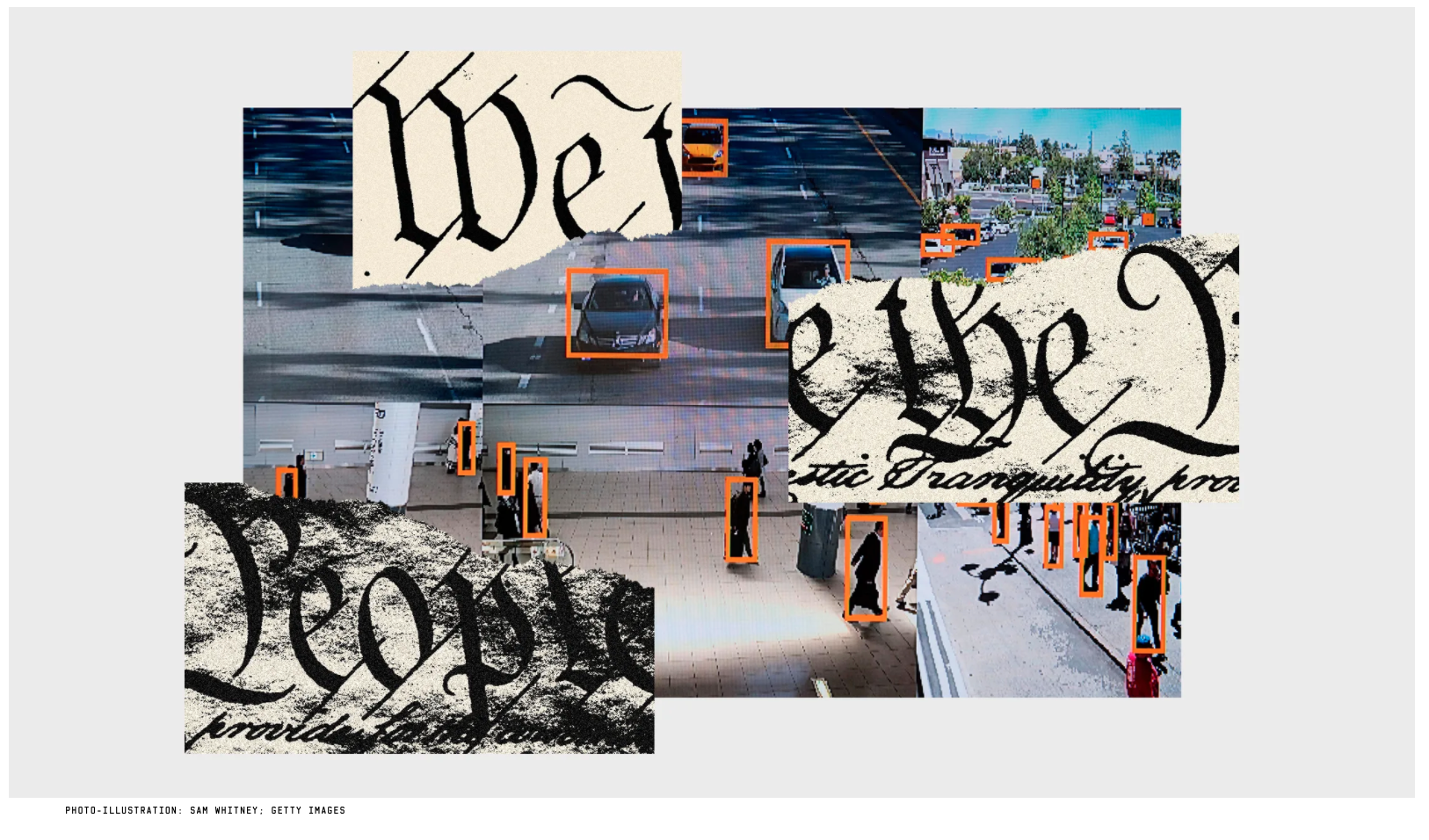

The US White House Office of Science and Technology Policy has called for a Bill of Rights for an AI-Powered World in an opinion piece in Wired magazine.

It resonates with me because it articulates the pervasive power of AI and the harmful effects of AI bias on the real lives of the people it affects. The authors list what could be included in the AI Bill of Rights:

1. your right to know when and how AI is influencing a decision that affects your civil rights and civil liberties;

2. your freedom from being subjected to AI that hasn’t been carefully audited to ensure that it’s accurate, unbiased, and has been trained on sufficiently representative data sets;

3. your freedom from pervasive or discriminatory surveillance and monitoring in your home, community, and workplace;

4. your right to meaningful recourse if the use of an algorithm harms you.

The US White House Office of Science and Technology Policy (OSTP) is requesting input from interested parties on past deployments, proposals, pilots or trials, and current uses of biometric technologies, including HR professionals using AI for identity and emotion tracking and inference, here (deadline: 5 pm ET, January 15, 2022).

NATO has released its Artificial Intelligence Policy (here). It sets standards for the responsible use of AI technologies, in accordance with international law and NATO’s values. This is a welcome step toward ensuring that AI used in defence is not turned adversarial by malicious actors.

AI Bias Leaves No Human Behind

When we look closer, AI bias is not limited to marginalized people, so no one should think that it only affects "the other." AI can be biased against southern accents, or against people wearing a beard or glasses.

I recently hosted a webinar on the State of AI Ethics in Autonomous Vehicles at WeeklyWed at the Business School of AI, where we reviewed how Level 3 autonomous vehicles must transfer control to a human in the case of a disengagement. That means the AI has to judge whether a person is alert or fit to take control; otherwise, it is a business liability for the automaker and the AV technology companies. From an AI ethics and inclusive AI perspective, to paraphrase AI Ethicist and Cognitive Science Researcher Susanna Raj, "there is no clear construct of what 'able' or 'fit' means" with which to consistently define the conditions an AI should track before handing control of a vehicle traveling at 60 mph, among other human drivers and passengers, over to a human.

Therein lies the answer to our debate about whether regulation stifles innovation.

Innovation is needed, but not at the cost of human lives. AI Ethics affects all of us in one situation or another. So we need to embrace regulation, with meaningful debate on what levels are acceptable and right, and retain human agency amid the growing autonomy of AI.

Thus we can ensure that AI Ethics is not a distraction, and that AI, with all its glorious promise, does not become a distraction in a future that works for all of us.

Sudha Jamthe is a Technology Futurist and teaches AI and AI ethics at Stanford Continuing Studies and the Business School of AI. Her aspiration is to guide diverse professionals to build AI toward an equitable, limitless world.
