September 2018

AutomatedBuildings.com

“No thanks,” right?

Don’t worry, it’s not spying on you or judging you. Nor will it be

sending that video to anyone else for that purpose. The images

it’s capturing are only being used locally by the controller to improve

your indoor environment, provide security, and conserve energy. The

imagery that’s captured is processed directly at the device. Only

necessary information is extracted from the video stream and then all

unused data is discarded right there. It goes no further than the

device.

The only pieces of information your zone controller wants to know are:

- The approximate dimensions of the room.

- The number of human occupants.

- Their approximate locations.

- Their general level of physical activity.

- The lighting conditions in the room.

These

bits of information can be used for zone control applications much

better than current devices. Traditional control tasks such as

scheduling and occupancy can be accurately determined rather than

guessed. Say, for example, a school classroom has a full class

scheduled in the BMS, but no one actually shows up that day.

Should the ventilation go to occupied airflow? Should the zone

temperature go to occupied setpoint? Doing so with nobody there is a

tremendous waste of money and electricity. A motion sensor might help

solve the problem to some degree. However, traditional occupancy or

vacancy sensors rely on very good placement and sometimes constant

detected motion to stay active. Also, what if only a single person

walks in and then walks right out? Should the zone go active for that

whole period? What if everyone is in there, but they are stationary and

not moving around enough? Will the motion sensor go inactive? These are

the kinds of control issues contractors deal with every day.
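
The questions above can be made concrete with a small sketch. It assumes the camera pipeline hands the controller a head count per processed frame. The names `ZoneSnapshot` and `OccupancyLogic` and the two delay values are hypothetical, chosen only for illustration. The zone goes occupied only after people have stayed for a while, and goes vacant only after the room has been empty for a while. Stillness does not matter, because the count comes from the image rather than from detected motion.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class ZoneSnapshot:
    """What the controller keeps from one processed frame (hypothetical fields)."""
    timestamp_s: float                       # time the frame was processed
    occupant_count: int                      # number of people seen in the frame
    locations: List[Tuple[float, float]] = field(default_factory=list)


class OccupancyLogic:
    """Debounced occupancy state driven by head count instead of motion."""

    def __init__(self, enter_delay_s: float = 120.0, exit_delay_s: float = 300.0):
        self.enter_delay_s = enter_delay_s   # assumed dwell time before "occupied"
        self.exit_delay_s = exit_delay_s     # assumed empty time before "vacant"
        self.occupied = False
        self._since: Optional[float] = None  # when state and observation began to disagree

    def update(self, snap: ZoneSnapshot) -> bool:
        people_present = snap.occupant_count > 0
        if people_present == self.occupied:
            self._since = None               # observation agrees with current state
            return self.occupied
        if self._since is None:
            self._since = snap.timestamp_s   # start timing the disagreement
        delay = self.enter_delay_s if people_present else self.exit_delay_s
        if snap.timestamp_s - self._since >= delay:
            self.occupied = people_present   # disagreement lasted long enough
            self._since = None
        return self.occupied
```

With these assumed delays, a person who walks in and right back out never switches the zone to occupied, and a scheduled class that never arrives leaves the zone at its unoccupied airflow and setpoint.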

Another

related issue is access control. Accessing a room can be an

attention-hijacking experience. In a simple system, a card or key fob

reader can be positioned at the entrances and exits to a

building. A single access control point is relatively easy to

deal with, but as additional layers of access are required within the

building, more and more card readers need to be added. This is a

less-than-perfect system.

Zone

lighting control in a space can also be difficult, especially if there

are multiple light sources such as windows or skylights. Often a zone

lighting system is dependent on a single discrete light level sensor.

Placement and orientation of this sensor are critical and sometimes

difficult to get right the first time. We once installed, in our

brand-new office, a demo of a lighting system that we would soon be

selling. The system worked well during the initial start-up and

commissioning, but after a few months of use, the dimming control began

to behave erratically in certain zones. The problem turned out to be that

certain light level sensors were positioned in such a way that light

from nearby windows hit them directly as the seasons changed. This

caused the feedback loop for those individual sensors to respond

incorrectly, either by dimming the lights completely off or by fluctuating

on and off. The problem here was that the value from a single sensor in

one location was representing the entire room.
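
As a rough illustration of the alternative, the sketch below estimates a room light level from a whole grayscale frame instead of from one point. It assumes the frame arrives as a list of pixel rows with values from 0 to 255 and that the image has at least as many rows and columns as the tile grid. The function names and the choice of a median are assumptions made for this example, not a description of any particular product.

```python
from statistics import median
from typing import List


def zone_light_levels(gray: List[List[int]], rows: int, cols: int) -> List[List[float]]:
    """Split a grayscale image into rows x cols tiles and return each tile's mean brightness."""
    height, width = len(gray), len(gray[0])
    levels = []
    for r in range(rows):
        y0, y1 = r * height // rows, (r + 1) * height // rows
        row_levels = []
        for c in range(cols):
            x0, x1 = c * width // cols, (c + 1) * width // cols
            tile = [gray[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            row_levels.append(sum(tile) / len(tile))
        levels.append(row_levels)
    return levels


def room_light_level(gray: List[List[int]], rows: int = 2, cols: int = 2) -> float:
    """Median of the tile means, so a single sunlit patch cannot dominate the result."""
    tiles = [v for row in zone_light_levels(gray, rows, cols) for v in row]
    return median(tiles)
```

If direct sunlight saturates one corner of the frame, only that tile reads high. The median of the tiles still reflects the rest of the room, which is the behavior a single badly placed sensor cannot offer.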

In

all of the above situations, a video image of the room would do much

better and could clear up the confusion and ambiguity that are

consequences of using individual discrete sensors. A single inexpensive

miniature camera can replace the motion sensor, light level sensor, and

access controller. There would be no need to install these separate

sensors, clutter up walls and ceiling space, or pull long

wire runs through the walls. Instead of receiving only

single localized values, you can view the space in its

complete context. A video image of a space provides large amounts of

contextual data. If only there were a person available to continuously

view the video and make the control adjustments all day. Instead of a

live person, we can now perform tasks such as these with edge-bots!

Traditional

cameras used for security purposes do not usually store image

streams locally for long periods of time. Video data is typically

sent across networks to a centralized recording device. If any kind of

artificial intelligence processing were to occur, it would have to be

at that central device. This architecture can limit the total number of

possible camera-equipped zone controllers, as streaming video is the

most bandwidth-intensive type of network communication in a building

automation system, especially if the images are to be processed for

control information and the results sent back to the controller in real time.

For many large buildings, it would be impractical to have image

streaming for every single zone to a central location. It would make

much better sense to process the imagery right there at the edge device.
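
A back-of-the-envelope calculation shows the scale of the difference. Every number below is an assumption picked for illustration (500 zones, 2 Mbit/s per video stream, a 200-byte result message once per second), not a measurement from a real building.

```python
def streaming_load_mbps(zones: int, stream_mbps: float) -> float:
    """Total network load if every zone streams video to a central server."""
    return zones * stream_mbps


def edge_load_mbps(zones: int, bytes_per_update: int, updates_per_s: float) -> float:
    """Total network load if each zone sends only a small result message."""
    return zones * bytes_per_update * 8 * updates_per_s / 1_000_000


zones = 500                                              # assumed zone count
central = streaming_load_mbps(zones, stream_mbps=2.0)    # assumed 2 Mbit/s per camera
edge = edge_load_mbps(zones, bytes_per_update=200, updates_per_s=1.0)
print(f"central: {central:.0f} Mbit/s, edge: {edge:.1f} Mbit/s")
```

Under these assumptions, central processing would need roughly a gigabit of sustained traffic, while edge processing would need less than one megabit for the same building.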

This

is a perfect use case for the implementation of Machine Vision

and Deep Learning in edge devices. Machines are

programmed to recognize objects in much the same way we recognize

objects: through the use of neural networks. Recent advancements in

image recognition have allowed not only the correct recognition of

single objects by themselves, but also the detection of multiple

instances of the same kinds of objects in images with other types mixed

in. The process by which useful image recognition software is created

for use in machine vision is called Deep Learning. This process

consists of two stages: training and inference.

An artificial software neural network model is created and then trained

using standardized data sets. In this case, we would be using an

annotated dataset containing images of people such as the COCO dataset.

These software models are not trained at the edge device but rather on

a server with far greater resources, as this is the most

computationally demanding of the two stages. Once trained and

optimized, the software neural network model is then ready to be

deployed to recognize images on its own through inference at the edge

device. Dividing these two tasks in this way eliminates the need to

stream video data for processing across a network to a central

location. Since video images are not sent any further than the

edge device, this also may help alleviate some privacy concerns.
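
To make the inference stage a little more concrete, here is a minimal sketch of the step that would follow the detector on the edge device. It assumes the trained model returns one dictionary per detected object with a `label`, a `score`, and a `box`. That output shape, the function name `summarize_people`, and the 0.5 threshold are all hypothetical. Only the person count and the approximate locations are kept, and the frame itself can then be discarded.

```python
from typing import Dict, List, Tuple

Detection = Dict[str, object]  # hypothetical shape: {"label", "score", "box"}


def summarize_people(detections: List[Detection],
                     min_score: float = 0.5) -> Tuple[int, List[Tuple[float, float]]]:
    """Keep confident 'person' detections and return (count, box centers).

    Each box is assumed to be (x_min, y_min, x_max, y_max) in normalized 0-1 units.
    """
    centers = []
    for det in detections:
        if det["label"] != "person" or det["score"] < min_score:
            continue                         # ignore other classes and weak detections
        x0, y0, x1, y1 = det["box"]
        centers.append(((x0 + x1) / 2, (y0 + y1) / 2))
    return len(centers), centers
```

The result is a handful of numbers per frame, which is all the zone controller needs for the list of items given earlier.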

To

actually enable edge processing, we need suitable edge controller

hardware components. The problem in the past was that a device with the

processing power required to construct such hardware at a reasonable

cost did not exist. Most legacy HVAC zone controllers are based on microcontrollers,

which are simple, low-cost, single-task devices. These processors

usually only have enough resources to run simple small-footprint DDC

code and not much else. The situation has changed in recent years

with the wide availability of low-cost embedded microprocessors.

These devices, sometimes referred to as Systems on Chip

(SoCs), are much more like miniature desktop PCs that use full operating

systems such as Linux, and can run multiple programs concurrently. This

multitasking capability allows the device to easily run DDC programs as

well as other applications such as Haystack servers or AI processing

simultaneously. The prices of these chips are beginning to drop so

drastically that the other day a complete embedded ARM-Linux PC was announced that would only cost a

few dollars!

These

miniature computers can easily run traditional DDC code as well as

other applications, but the processing of voice and image data through

neural networks could use the assistance of specialized processors.

This summer Google announced the upcoming release of an Edge

Development Kit to accelerate the development of an AI edge-bot

style device. The offering is possibly an answer to the release of

competitor NVIDIA’s Jetson Development Board. The Google

processor board contains a special integrated circuit called the Tensor Processing Unit (TPU). This chip is a

specialized co-processor that is devoted to computational tasks related

to artificial intelligence and machine learning. The kit resembles a

Raspberry Pi in size and layout with the main difference being that the

portion containing the main processor, flash memory, RAM, and TPU are

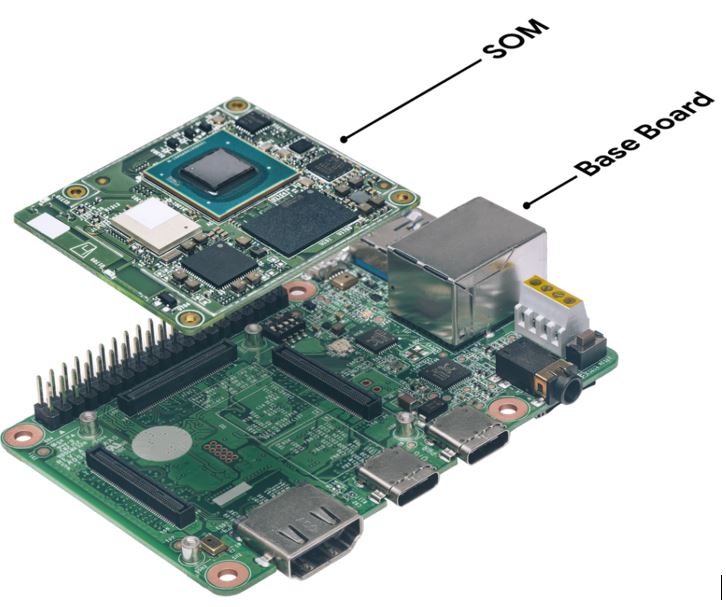

located on a single detachable module. This System on Module or (SOM)

is ready to be plugged into your own product’s baseboard when you are

ready to transition from development into a production device.

TensorFlow

is an open source software project that provides code libraries that

are well suited to deep learning neural network development.

Programmers are familiar with traditional basic data types such as

“char,” “int,” “string,” or “float.” A tensor is just another

data type, one whose format is that of a multidimensional array.

This

data-type format is convenient for representing and processing complex

vector-based information which flows through various operations in

neural networks. Traditional processor hardware architectures are not

well suited for performing operations on vectors and matrices. To

manipulate tensors more easily, the hardware architecture should be able to

perform matrix multiplication, which occurs repeatedly as the tensor

passes through layers of the network. The Google TPU chip provides this

hardware acceleration on the board, which helps offload neural network

processing tasks. Having a co-processor allows the main module’s

processor(s) to easily manage the other non-vector-based, scalar

applications that are typically seen in building automation software.
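
The repeated matrix multiplication described above can be sketched in a few lines of plain Python. This is a toy with two-dimensional arrays only and made-up weights, and the `relu` activation is a common choice assumed here for illustration. Libraries such as TensorFlow perform the same kind of operation on much larger, higher-dimensional tensors, which is the work a TPU is built to accelerate.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product: (n x k) times (k x m) gives (n x m)."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def relu(m: Matrix) -> Matrix:
    """Element-wise activation: negative values become zero."""
    return [[max(0.0, v) for v in row] for row in m]


def forward(x: Matrix, layers: List[Matrix]) -> Matrix:
    """Pass the input through each layer: multiply by its weights, then activate."""
    for weights in layers:
        x = relu(matmul(x, weights))
    return x
```

Every layer costs one matrix product, so a deep network on a full camera frame means a very large number of multiply-and-add operations per image.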

All

of these board sub-components combine to create the

foundation for the ultimate Open-Software, Open-Hardware, Edge

controller. This can be done, and it can be done at a reasonable cost.

Claims like this may seem like science fiction. If it were so easy to

build one of these devices, why has no company already offered such a

product? These concepts may sound far-fetched, but in fact, all of the

necessary components that would be needed for even a hobbyist to

implement an edge-bot zone controller are here and readily available

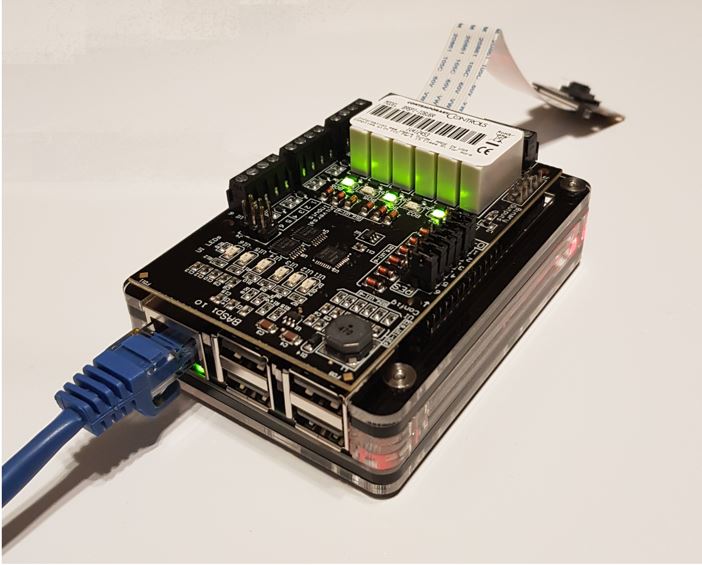

right now. For example, a Raspberry Pi can function as your main

processor board and can be had for less than 30 USD. Interestingly,

developers for Tensorflow recently announced full Raspberry Pi

support. A controller needs some I/O, for this you can use

Contemporary Controls BASPi add-on- board which will also at the same

time give you open-source Sedona DDC control. The Pi NoIR camera can be

your edge-bot’s eyes also for less than 30 dollars. You can learn how

to implement machine vision using OpenCV which is a free open source

software library to get you started on your machine-vision development

project. Indeed, the above pieces would give you all of the hardware

and most of the software to make an edge-bot. So if all of these bits

and pieces are available right now why has an edge-bot not yet been

created?

Editor's note: This Memoori report adds strength to Calvin's words: The Competitive Landscape for AI Video Analytics is Intense.

Published: August 27th, 2018. We have identified some 125

companies that are active in supplying appropriate semiconductor chips

and AI video analytic software products. New companies are being added

to this list almost weekly and although we cannot claim that it

includes all suppliers it is probably today the most comprehensive

listing on this subject.

About the Author

Calvin

Slater is a U.S. Navy Veteran and Graduate of UCLA with a BS in

Mechanical Engineering. He has spent eight years in the Building

Automation Controls Industry, and is highly interested in Embedded

Hardware as well as Open-Source building automation software frameworks.

Calvin Slater
